Very large

The idea of “extremely large” is constantly shifting and evolving. As time passes, we quickly adopt its new numeric definition and only rarely, with a mild sense of amusement, recall the old one.

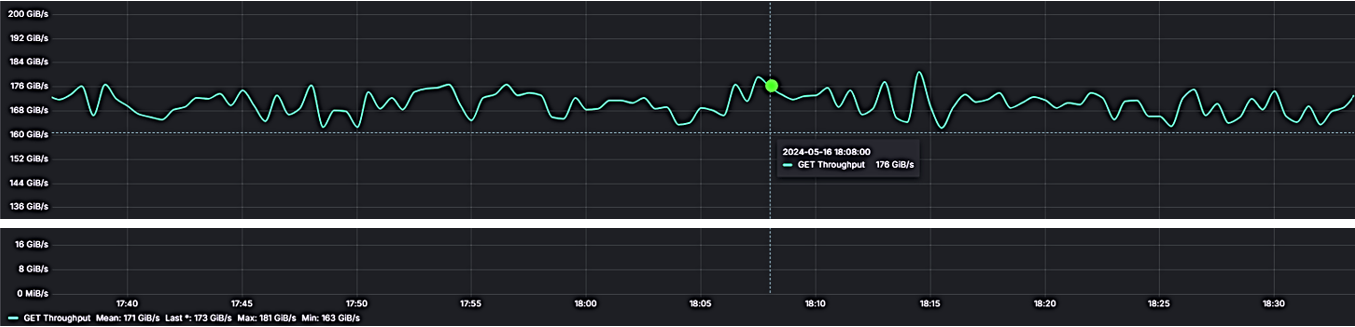

Take, for instance, aistore – an open-source storage cluster that deploys ad-hoc and runs anywhere. Possibly because of its innate scalability (proportional to the number of data drives), the associated “largeness” is often interpreted as total throughput: the throughput of something like this particular installation, where 16 gateways and 16 DenseIO.E5 storage nodes provide a sustained total of 167 GiB/s.

But no, that is not the kind of “large” we’ll be talking about. We mean a different one.